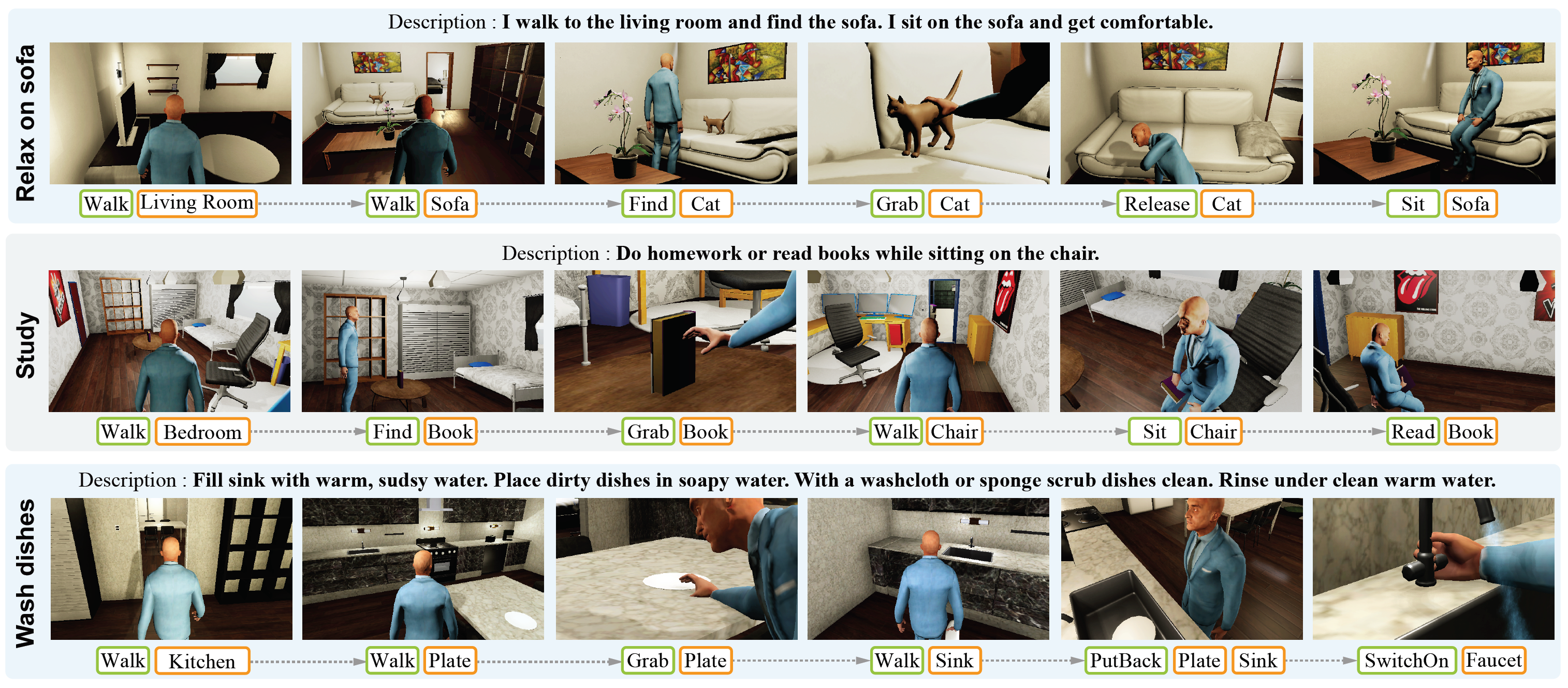

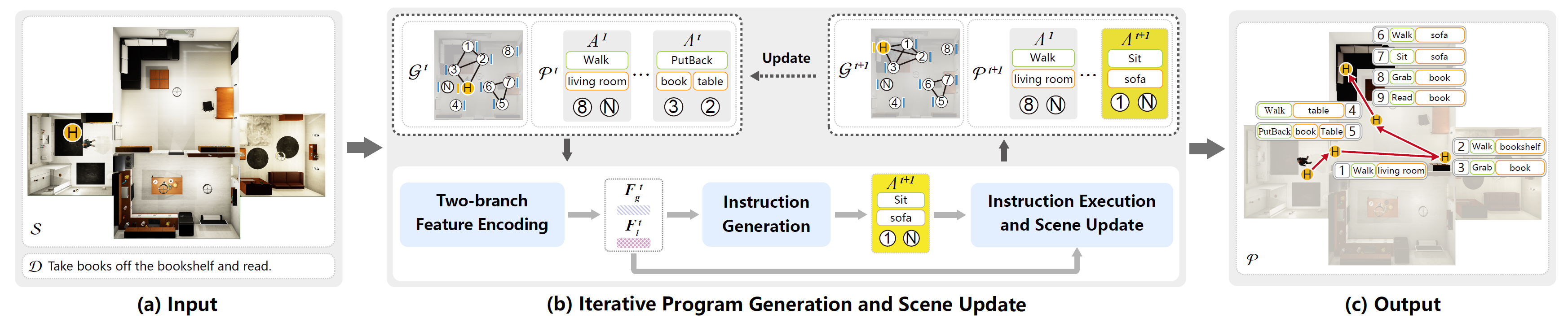

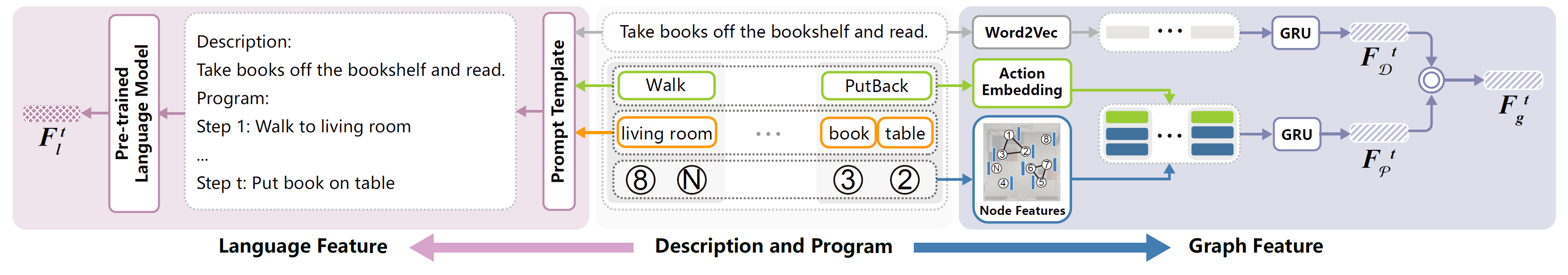

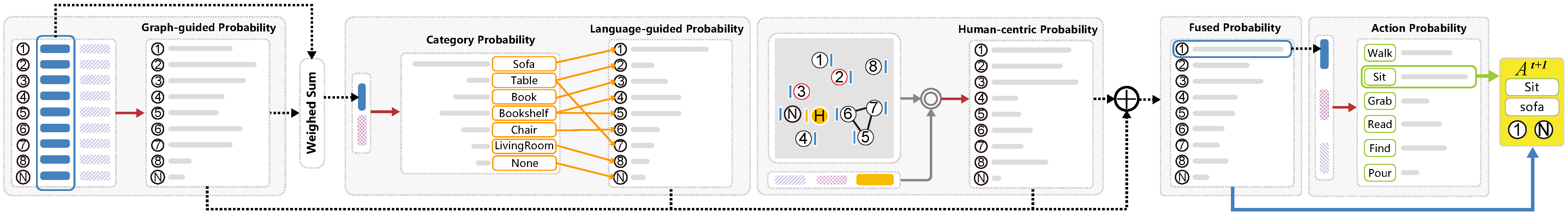

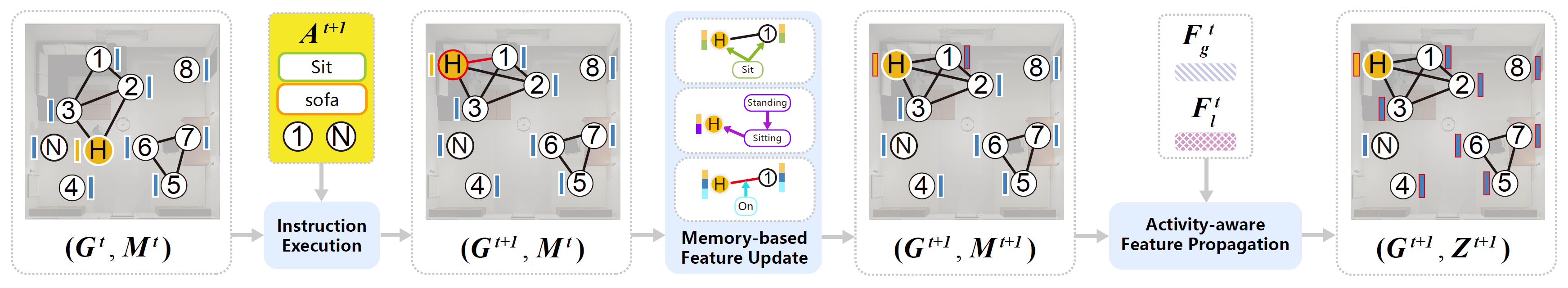

We address the problem of scene-aware activity program generation, which requires decomposing a given activity task into instructions that can be sequentially performed within a target scene to complete the activity. While existing methods have shown the ability to generate rational or executable programs, generating programs that are both highly rational and highly executable remains a challenge. Hence, we propose a novel method whose key idea is to explicitly combine the language rationality of a powerful language model with dynamic perception of the target scene where instructions are executed, to generate programs with high rationality and executability. Our method generates the instructions of the activity program iteratively. Specifically, a two-branch feature encoder operates on language-based and graph-based representations of the current generation progress to extract language features and scene graph features, respectively. A predictor then uses these features to generate the next instruction in the program. Subsequently, another module executes the predicted action and updates the scene, so that the updated scene can be perceived in the next iteration. Extensive evaluations on the VirtualHome-Env dataset show the advantages of our method over previous work. Key algorithmic designs are validated through ablation studies, and results on other types of inputs are also presented to show the generalizability of our method.
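The iterative generate-execute-update loop described above can be sketched as a toy program. This is a minimal illustration under stated assumptions, not the authors' implementation: `encode_language`, `encode_scene`, `predict_next`, and `execute` are hypothetical rule-based stand-ins for the learned language branch, the scene-graph branch, the instruction predictor, and the scene-updating module, and the scene graph is reduced to a set of (object, state) facts. The `ACTIONS` table and the bracketed instruction strings are illustrative only.

```python
from dataclasses import dataclass, field

# Hypothetical action library: instruction -> (preconditions, effects).
# Both are sets of (object, state) facts in the toy scene graph.
ACTIONS = {
    "[Walk] <tv>": (set(), {("character", "near_tv")}),
    "[SwitchOn] <tv>": ({("character", "near_tv")}, {("tv", "on")}),
    "[Watch] <tv>": ({("tv", "on")}, {("character", "watching_tv")}),
}


@dataclass
class Scene:
    # Toy scene graph reduced to a set of (object, state) facts.
    facts: set = field(default_factory=set)


def encode_language(task, program):
    # Stand-in for the language branch: task text plus instructions so far.
    return " ".join([task] + program).lower()


def encode_scene(scene):
    # Stand-in for the graph branch: an immutable snapshot of the facts.
    return frozenset(scene.facts)


def predict_next(lang_feat, scene_feat, program):
    # Stand-in predictor: the first unused action that mentions an object
    # from the language context and whose preconditions hold in the scene.
    for instr, (pre, _) in ACTIONS.items():
        obj = instr.split("<")[1].rstrip(">")
        if instr not in program and obj in lang_feat and pre <= scene_feat:
            return instr
    return "[END]"


def execute(scene, instr):
    # Stand-in executor: apply the action's effects to the scene in place.
    _, effects = ACTIONS[instr]
    scene.facts |= effects


def generate_program(task, scene, max_steps=10):
    program = []
    for _ in range(max_steps):
        lang_feat = encode_language(task, program)  # language features
        scene_feat = encode_scene(scene)            # scene graph features
        instr = predict_next(lang_feat, scene_feat, program)
        if instr == "[END]":
            break
        program.append(instr)
        execute(scene, instr)  # updated scene is perceived next iteration
    return program
```

For example, `generate_program("Watch TV", Scene())` returns `["[Walk] <tv>", "[SwitchOn] <tv>", "[Watch] <tv>"]`. The `[SwitchOn]` step is only chosen after the executed `[Walk]` step has changed the scene. This mirrors, in a very simplified form, why re-perceiving the updated scene at every iteration helps keep the generated program executable.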

We thank the anonymous reviewers for their valuable comments. This work was supported in part by NSFC (62322207, U21B2023, U2001206), GD Natural Science Foundation (2021B1515020085), Shenzhen Science and Technology Program (RCYX20210609103121030), Tencent AI Lab Rhino-Bird Focused Research Program (RBFR2022013), NSERC Canada through a Discovery Grant, and Guangdong Laboratory of Artificial Intelligence and Digital Economy (SZ).

@article{Su23LangGuidedProg,
  title={Scene-aware Activity Program Generation with Language Guidance},
  author={Zejia Su and Qingnan Fan and Xuelin Chen and Oliver van Kaick and Hui Huang and Ruizhen Hu},
  journal={ACM Transactions on Graphics (Proceedings of SIGGRAPH ASIA)},
  volume={42},
  number={6},
  pages={},
  year={2023},
}